10 Best AI Character Tools in 2025 to Achieve Perfect Consistency

If you build characters for games, videos, marketing, or chat experiences, you already know how hard consistency is. Let the voice, look, or behavior drift across channels and the illusion breaks. I've noticed teams waste weeks chasing mismatched assets, contradictory backstories, or jittery lip-sync. That’s avoidable with the right toolset.

This guide walks through the 10 best AI character tools in 2025, spanning AI avatar creators, AI storytelling tools, character brains, animation systems, and pipelines for consistent, reusable characters. You'll get practical tips, common pitfalls, and a recommended workflow to ship reliable characters that feel alive and stay on brand.

Target audience: content creators, marketers, game developers, filmmakers, YouTubers, and businesses. If you want consistent AI-driven characters for branding, storytelling, or customer engagement, you'll find the tools and tactics here useful.

Why character consistency matters (and what it really means)

Consistency isn't just visual. It’s voice, behavior, memory, knowledge, and timing. A consistent character looks the part, talks the same way, responds predictably to prompts, and keeps its backstory straight. Mess up one of those and you lose trust quickly.

In my experience, teams obsess over pixel-perfect renders but neglect persona rules. A perfect face with a wandering personality is still inconsistent. Focus first on persona definition, then lock in the visual and behavioral layers.

Good consistency means:

- Stable voice and tone across channels.

- Predictable behavior according to defined personality rules.

- Persistent memory and context where appropriate.

- Reproducible visuals and animation that match the persona.

- Governance: guardrails, safety filters, and version control.

What to look for in AI character tools

Different products solve different layers. Choose tools that map to your priorities. Below are the practical features I prioritize when evaluating any AI character tool:

- Persona engine: ability to author and lock down personality traits, rules, and backstory.

- Memory and state: built-in long- and short-term memory, or easy integration with knowledge stores.

- Multimodal output: image/avatar, voice, animation, and text without stitching too many brittle tools together.

- Versioning & governance: rollbacks, audit trails, and access controls that keep a character consistent over time.

- Integrations: APIs, Unity/Unreal plugins, streaming SDKs, and common pipelines.

- Export fidelity: reproducible models/weights, fine-tuning, and the ability to freeze a persona for deployment.

- Ease of iteration: preview tools, quick edits, and local testing environments.

How I evaluated these tools

I looked at stability, persona controls, multimodal capabilities, integration with game/film pipelines, and real-world use cases. I also considered pricing transparency and developer ergonomics because if it's painful to iterate, you won't ship consistent characters.

Pro tip: always test a candidate tool end-to-end with a short internal pilot that simulates a real customer interaction. That exposes corner cases fast.

Top 10 AI character tools in 2025

Below are the tools I recommend across categories, from full-stack virtual character builders to specialized animation and voice systems. Each entry covers what the tool does best, when to use it, and common pitfalls.

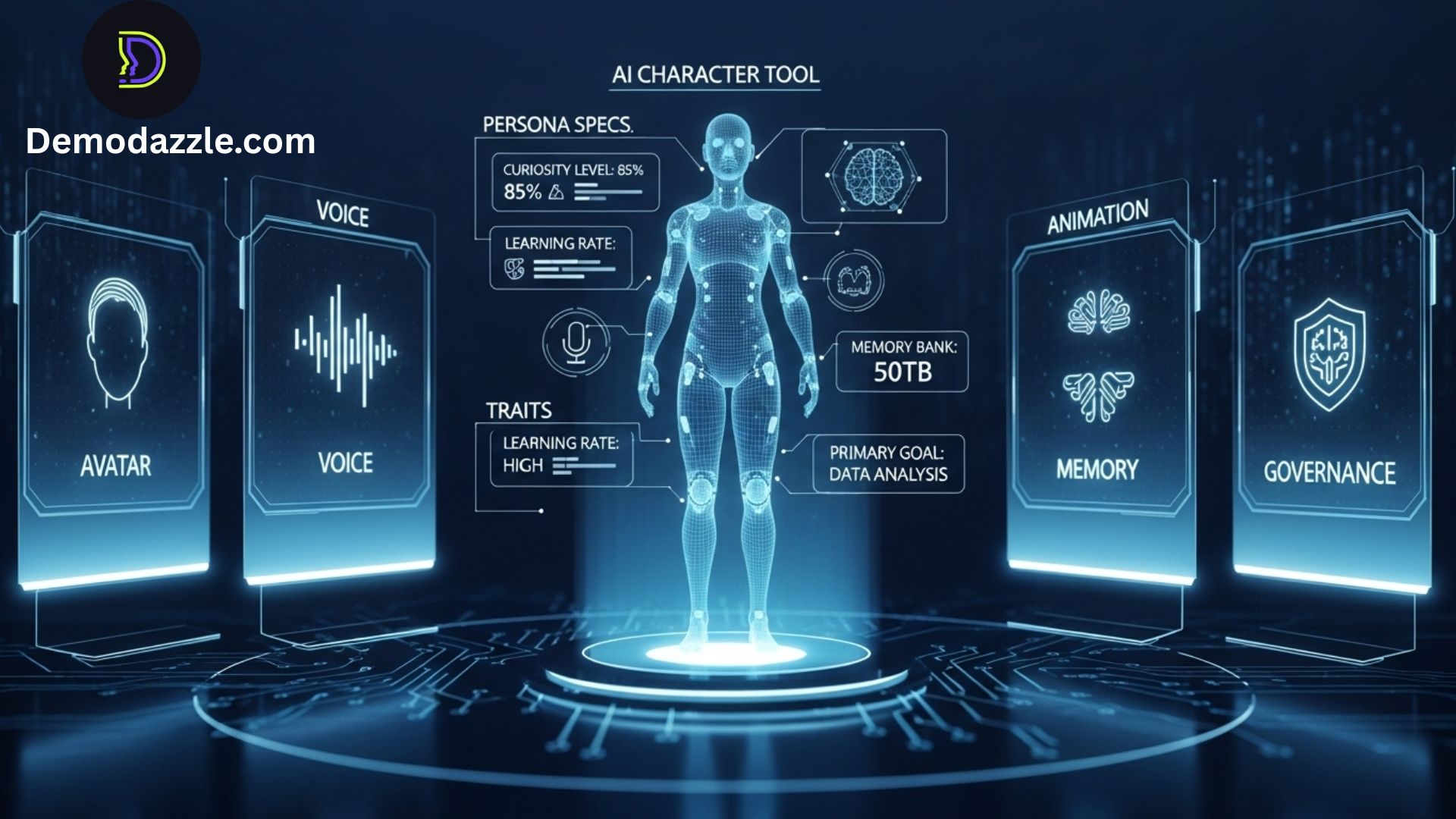

1. Demodazzle: full-stack character consistency platform

Why it’s here: Demodazzle focuses on consistent, reusable characters across content types. It's built for marketing teams, game studios, and storytellers who need a single source of truth for persona, assets, and behavior.

- Standout features: persona templates, centralized style guides, versioned character profiles, visual avatar builder, text & voice persona controls, and integration APIs.

- Best for: businesses and creators who want to maintain consistent brand characters across video, chat, and interactive experiences.

- Consistency strengths: persona locks, access control, and audit logs. You can freeze a persona and deploy it across channels.

- Tips: Start with a minimal persona spec (voice + three core behaviors). Use Demodazzle’s export options to seed downstream tools.

- Watchouts: Don’t treat it as a one-click magic box for creative direction; you still need scripts, voice casting, and art polish.

In short, Demodazzle is a great place to centralize character rules, especially when multiple teams touch the same persona.

2. Inworld AI: conversational NPC & character brains

Why it’s here: Inworld builds conversational AI characters with detailed internal goals, memory, and emotion models. It's tailored for games, simulations, and immersive experiences.

- Standout features: rich persona authoring, timeline-based memory, emotion graphs, Unity and Unreal SDKs, and tools to simulate interactions at scale.

- Best for: game developers and VR/AR creators needing believable NPCs that remember player actions and show consistent behavior.

- Consistency strengths: durable memory and persona constraints keep NPC responses aligned across sessions.

- Tips: Use conversation playbooks and pre-baked fallback responses to avoid unexpected behavior. Test edge flows where players probe the NPC with uncommon questions.

- Watchouts: Overloading memory with irrelevant details makes the system noisy. Curate what the character should remember.

3. Character.ai: community-driven character generator

Why it’s here: Character.ai made conversational characters mainstream. It’s fast to prototype personalities and test voice/tone with users. The platform’s community models show how characters behave in the wild.

- Standout features: easy persona creation UI, community sharing, rapid iteration, and conversational tuning knobs.

- Best for: rapid prototyping and user-testing character concepts for marketing or social projects.

- Consistency strengths: simple persona controls let you define baseline behaviors, but the platform lacks deep governance for enterprise-scale deployments.

- Tips: Use Character.ai to validate tone-of-voice before locking in a persona on your production platform.

- Watchouts: Community-shared models can diverge over time; export what you need and keep a canonical version elsewhere.

4. MetaHuman Creator (Unreal): hyperreal 3D avatars

Why it’s here: If visual fidelity matters, MetaHuman is hard to beat for realistic 3D characters. Pair it with Unreal’s animation stack for top-tier visuals in film and AAA games.

- Standout features: photoreal faces, detailed rigging, hair/cloth systems, and tight Unreal Engine integration.

- Best for: filmmakers and game devs needing lifelike avatars with cinematic animation pipelines.

- Consistency strengths: consistent facial rig and asset versioning inside Unreal, which helps when multiple artists work on the same character.

- Tips: Export consistent look-dev presets and use standardized rigs for motion capture retargeting.

- Watchouts: Unreal projects can get heavy fast; plan your asset pipeline and LODs early.

5. Synthesia: AI avatar creator for video content

Why it’s here: Synthesia is the go-to for marketing teams that need on-brand talking-head videos without studio shoots. It’s both an AI avatar creator and a fast video production tool.

- Standout features: realistic talking avatars, multilingual voice models, script-to-video workflows, and easy templates for marketing & training.

- Best for: marketers, educators, and internal comms teams who want quick videos with consistent branding.

- Consistency strengths: templates and brand profiles keep voice, phrasing, and on-screen assets aligned across videos.

- Tips: Maintain a brand script library and use the same avatar/voice across campaigns for recognizability.

- Watchouts: Deep customization (e.g., bespoke lip shapes) may still need post-production for perfect sync.

6. D-ID: realistic talking heads from photos

Why it’s here: D-ID excels at animating photos into speaking avatars with natural lip-sync and expression. It's a lightweight option if you need on-brand spokespersons from images or headshots.

- Standout features: photo-to-video transformation, voice cloning, real-time streaming, and strong privacy features for consented headshots.

- Best for: brands that want consistent spokesperson videos or legacy photo assets turned into short clips.

- Consistency strengths: stable facial animation presets and voice cloning help preserve brand identity across short-form content.

- Tips: Use headshots taken under controlled lighting to reduce artifacts; keep scripts concise for tight lip-sync fidelity.

- Watchouts: Check legal rights when animating real people, and use watermarking for internal review builds.

7. Reallusion Character Creator + iClone (rigging & animation pipeline)

Why it’s here: Reallusion provides a robust character creation and animation toolset for studios that need detailed rigging, facial animation, and production-friendly pipelines.

- Standout features: parametric character modeling, facial puppeteering, mocap retargeting, and export to game engines.

- Best for: indie studios and mid-size teams that need control over rigging and animation without a huge toolchain.

- Consistency strengths: reusable rigs and animation presets speed consistent reproductions across projects.

- Tips: Build a reusable rig library and standardized animation clips for brand-specific gestures and expressions.

- Watchouts: Pipeline glue may still be needed for live chat or streaming scenarios.

8. DeepMotion: AI animation tools & motion capture from video

Why it’s here: DeepMotion converts video into clean motion data and provides AI-driven procedural animation. It's a strong option if you want natural movement without a mocap studio.

- Standout features: video-to-motion capture, physics-aware retargeting, runtime animation blending.

- Best for: game and app devs who want live or recorded motion data quickly and affordably.

- Consistency strengths: motion filters and reusable retargeting profiles help maintain consistent body language across characters.

- Tips: Standardize camera angles for source videos so retargeted animation stays predictable.

- Watchouts: Lower-quality source videos create noisy motion data; a small mocap stage can save hours of cleanup.

9. Replica Studios: expressive AI voice acting

Why it’s here: Voice matters. Replica Studios offers expressive, character-driven AI voices that can carry persona and emotion consistently across scenes.

- Standout features: emotional voice models, clip-based batching, integration with game engines, and licensing for commercial use.

- Best for: developers and filmmakers needing a large library of consistent voice performances without scheduling actors.

- Consistency strengths: voice presets and emotional weights let you reproduce the same voice style across many outputs.

- Tips: Keep a voice style guide (intonation, pitch, pace) and version your voice presets.

- Watchouts: Some scenes still benefit from human actors; use AI voice as an iterative tool rather than a complete replacement in high-stakes productions.

10. OpenAI Custom GPTs + LangChain (AI storytelling tools & character brains)

Why it’s here: A lot of character behavior now lives in LLMs. Custom GPTs and toolchains like LangChain give you fine-grained control over persona, memory, and external tools (knowledge bases, APIs, content generation).

- Standout features: fine-tuning, prompt templates, tool invocation, retrieval-augmented generation (RAG), and plugin ecosystems.

- Best for: teams building chat-based experiences, story engines, and systems that need real-time access to updated knowledge and services.

- Consistency strengths: you can freeze persona prompts, implement guardrails, and version prompt libraries to maintain consistent speech and decisions.

- Tips: Use small, testable prompt modules and a retrieval store for canonical facts (dates, backstory points, brand facts). Keep a fallback script for contradictions.

- Watchouts: Prompt drift and hallucination are still problems. Invest in RAG, verification layers, and deterministic response tactics for mission-critical flows.
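The tip about small prompt modules and a retrieval store for canonical facts can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the OpenAI or LangChain API: the persona ("Mira"), the module names, and the fact list are hypothetical, and simple keyword overlap stands in for the embedding search a real RAG setup would use.

```python
import re

# Hypothetical persona modules: small, independently testable pieces of the system prompt.
PROMPT_MODULES = {
    "identity": "You are Mira, the friendly onboarding guide for Acme Cloud.",
    "voice": "Speak warmly, in short sentences, and avoid slang.",
    "rules": "If a question conflicts with the canonical facts, say you will check and follow up.",
}

# Hypothetical canonical facts store (backstory points, brand facts).
CANONICAL_FACTS = [
    "Mira joined Acme Cloud in 2021 as its onboarding guide.",
    "Mira's favorite feature is automatic backups.",
    "Acme Cloud support is available 24/7 via chat.",
]


def tokenize(text: str) -> set[str]:
    """Lowercased word set; a crude stand-in for embedding similarity."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve_facts(query: str, facts: list[str], k: int = 2) -> list[str]:
    """Return up to k facts sharing the most words with the query (ties keep store order)."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(fact)), fact) for fact in facts]
    relevant = [pair for pair in scored if pair[0] > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)  # stable sort keeps ties in order
    return [fact for _, fact in relevant[:k]]


def build_system_prompt(query: str, module_order: list[str]) -> str:
    """Join persona modules in a fixed order, then append the facts relevant to this query."""
    parts = [PROMPT_MODULES[name] for name in module_order]
    facts = retrieve_facts(query, CANONICAL_FACTS)
    if facts:
        parts.append("Canonical facts:\n" + "\n".join(f"- {fact}" for fact in facts))
    return "\n\n".join(parts)


prompt = build_system_prompt("When did Mira join Acme Cloud?", ["identity", "voice", "rules"])
print(prompt)
```

Because each module is a separate string, you can version and test them independently, and the canonical facts live in one place instead of being scattered through one long prompt.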

Putting the tools together: Recommended workflows

Most projects benefit from a hybrid pipeline. Here’s a lightweight, practical workflow I’ve used across marketing and game projects.

- Define a canonical persona spec: single doc with voice, three core behaviors, backstory, taboo list, sample phrasings, and visual style notes. Keep it under 2 pages. This is your character style guide.

- Build the “brain”: use Inworld or Custom GPT for conversational logic and memory. Export stable persona prompts and memory schemas.

- Create visuals: MetaHuman or Character Creator for 3D; Synthesia/D-ID for quick 2D talking heads. Bake look-dev presets.

- Produce voice: Replica or Synthesia voices; create voice presets (emotional weights, pace, pitch).

- Animate and polish: DeepMotion for body movement, Reallusion for facial/rigging cleanup, and manual polishing where needed.

- Centralize governance: host persona spec and assets in Demodazzle (or equivalent). Version everything and lock releases to channels (webchat, video, game client).

- Test & iterate: run 50–100 scripted interactions and 20 user-driven tests. Track policy violations, persona drift, and visual/voice mismatches.

- Deploy with monitoring: log conversations, add human-in-the-loop moderation where necessary, and schedule quarterly persona reviews.

That pipeline keeps each layer accountable. Don’t skip the persona spec: it’s the glue that stops tools from drifting in different directions.
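One way to make the persona spec the single source of truth is to store it as immutable, versioned data and pin every channel to a hash of it. The sketch below assumes Python 3.9+; the field names, the `mira-v1.0.0` version string, and the channel names are hypothetical examples, not a schema from any of the tools above.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class PersonaSpec:
    """Canonical persona spec; frozen so a released version cannot be edited in place."""
    name: str
    version: str                     # hypothetical naming convention, e.g. "mira-v1.0.0"
    voice: str
    core_behaviors: tuple[str, ...]  # keep this to about three
    backstory: str
    taboo: tuple[str, ...] = ()
    sample_phrasings: tuple[str, ...] = ()
    visual_notes: str = ""

    def fingerprint(self) -> str:
        """Short SHA-256 of the spec contents; changing any field will, in practice, change it."""
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]


def lock_release(spec: PersonaSpec, channels: list[str]) -> dict[str, str]:
    """Pin every channel to the same spec fingerprint."""
    fingerprint = spec.fingerprint()
    return {channel: fingerprint for channel in channels}


def drifted_channels(releases: dict[str, str], canonical: PersonaSpec) -> list[str]:
    """List channels whose pinned fingerprint no longer matches the canonical spec."""
    expected = canonical.fingerprint()
    return sorted(channel for channel, pinned in releases.items() if pinned != expected)


mira = PersonaSpec(
    name="Mira",
    version="mira-v1.0.0",
    voice="warm, concise, no slang",
    core_behaviors=("explains before selling", "admits uncertainty", "stays upbeat"),
    backstory="Onboarding guide for a cloud product.",
    taboo=("politics",),
)
releases = lock_release(mira, ["webchat", "video", "game_client"])

# Someone ships a tweaked voice to the video channel only.
mira_v11 = replace(mira, version="mira-v1.1.0", voice="warm, concise, light humor")
releases["video"] = mira_v11.fingerprint()
print(drifted_channels(releases, mira))  # ['video']
```

A non-empty result from `drifted_channels` is the cue to either roll that channel back or promote the new spec everywhere before the next release.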

Common mistakes and pitfalls (and how to avoid them)

Here are practical missteps I see over and over, and how to prevent them:

- No single source of truth: multiple teams iterate on personalities in silos. Fix: centralize the persona spec and lock canonical assets.

- Overfitting to test prompts: characters that excel at demo scripts but fail with real users. Fix: test with real user input and edge cases.

- Neglecting memory curation: dumping everything into long-term memory creates noise. Fix: define what matters (major events, relationships, and commitments) and store only that.

- Forgetting legal & consent: animating or cloning real people without rights is risky. Fix: document rights and use synthetic avatars when unsure.

- Ignoring latency and bandwidth: high-fidelity models can be slow. Fix: create degraded versions for low-bandwidth contexts and do client-side caching.

- Underestimating voice nuance: wrong cadence undermines personality. Fix: capture examples, build a voice style guide, and use voice presets consistently.

- Skipping governance: no rollback plan when a character goes off-script. Fix: implement moderation hooks and an emergency persona freeze.
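The memory-curation fix can be enforced with a simple allowlist. This minimal sketch assumes memories arrive already tagged with a category (by a classifier or a designer); the `MemoryItem` type, the category names, and the 50-item cap are hypothetical choices for illustration.

```python
from dataclasses import dataclass

# Only these categories are persisted: major events, relationships, and commitments.
KEEP_CATEGORIES = {"major_event", "relationship", "commitment"}


@dataclass
class MemoryItem:
    text: str
    category: str  # hypothetical tag assigned upstream (classifier or designer)


def curate(items: list[MemoryItem], max_items: int = 50) -> list[MemoryItem]:
    """Keep allowlisted categories, drop repeated texts, and cap size, favoring newer items."""
    seen: set[str] = set()
    kept: list[MemoryItem] = []
    for item in reversed(items):  # newest first, so the cap drops the oldest memories
        if len(kept) >= max_items:
            break
        key = item.text.strip().lower()
        if item.category in KEEP_CATEGORIES and key not in seen:
            seen.add(key)
            kept.append(item)
    kept.reverse()  # back to chronological order
    return kept


log = [
    MemoryItem("Player spared the bandit leader", "major_event"),
    MemoryItem("Player said the weather is nice", "small_talk"),
    MemoryItem("Player promised to return the sword", "commitment"),
    MemoryItem("Player spared the bandit leader", "major_event"),
]
print([m.text for m in curate(log)])
# ['Player promised to return the sword', 'Player spared the bandit leader']
```

Small talk never reaches long-term memory, and the duplicate event is stored once, which keeps recall focused on what the character should actually remember.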

Checklist: How to evaluate an AI character tool for consistency

Quick checklist you can use in vendor selection:

- Can I export and version persona specs?

- Does the tool support long-term and short-term memory control?

- Are voice/visual presets reproducible across sessions?

- Does it integrate with my pipeline (Unity/Unreal/Web/Streaming)?

- Is there an audit trail and role-based access?

- What are the licensing terms for commercial use?

- How easy is it to fall back to a static response if the model hallucinates?

- Is there a simple A/B test path to compare variations?
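For the checklist question about falling back to a static response, here is a minimal wrapper sketch. `generate` is a hypothetical stand-in for whatever model call your tool exposes, and the contradiction check is deliberately crude (if a reply mentions a fact's topic, it must also state the canonical value); real deployments would layer stronger verification on top.

```python
from typing import Callable

FALLBACK = "Good question! Let me check with the team and get back to you."


def violates(reply: str, taboo: tuple[str, ...], canonical: dict[str, str]) -> bool:
    """True if the reply uses a taboo term or mentions a fact's topic without its canonical value."""
    text = reply.lower()
    if any(term.lower() in text for term in taboo):
        return True
    for topic, value in canonical.items():
        if topic.lower() in text and value.lower() not in text:
            return True
    return False


def safe_reply(
    generate: Callable[[str], str],
    user_message: str,
    taboo: tuple[str, ...],
    canonical: dict[str, str],
    fallback: str = FALLBACK,
) -> str:
    """Call the model; return the static fallback on errors or failed checks."""
    try:
        reply = generate(user_message)
    except Exception:
        return fallback  # model/network failure: stay in character with a scripted line
    return fallback if violates(reply, taboo, canonical) else reply


# Hypothetical stand-ins for a real model call.
def on_script(message: str) -> str:
    return "We were founded in 2019, and we love helping new teams."


def off_script(message: str) -> str:
    return "We were founded in 1998!"


def flaky(message: str) -> str:
    raise TimeoutError("model timed out")


facts = {"founded": "2019"}
for model in (on_script, off_script, flaky):
    print(safe_reply(model, "When were you founded?", ("politics",), facts))
```

When evaluating a vendor, the practical question is whether its API lets you insert a check like `violates` between generation and delivery.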

Measuring consistency: KPIs that matter

Here are metrics I recommend tracking. These help you quantify whether your character is staying consistent across touchpoints.

- Behavioral drift rate: percentage of interactions that contradict the persona spec.

- Voice match score: automated similarity measure between output and voice/style presets.

- Memory recall accuracy: how often the character references saved facts correctly.

- User trust/affinity: NPS or qualitative feedback specific to the character experience.

- Content divergence incidents: times the character required manual rollback or correction.

Start with a few KPIs and iterate; don't try to track everything at once.
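Three of these KPIs reduce to simple ratios or counts over logged interactions, and a voice match score is often computed as a similarity between feature vectors. The sketch below assumes each log entry has already been labeled (by reviewers or an automated checker) and that voice features come from some speaker-embedding model; the `InteractionLog` fields are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class InteractionLog:
    contradicts_persona: bool  # flagged by a reviewer or an automated checker
    facts_asked: int           # saved facts the character needed to recall
    facts_recalled: int        # how many of those it recalled correctly
    needed_rollback: bool      # required manual rollback or correction


def consistency_kpis(logs: list[InteractionLog]) -> dict[str, float]:
    """Behavioral drift rate, memory recall accuracy, and divergence incident count."""
    if not logs:
        return {"behavioral_drift_rate": 0.0, "memory_recall_accuracy": 0.0, "divergence_incidents": 0.0}
    drift_rate = sum(log.contradicts_persona for log in logs) / len(logs)
    asked = sum(log.facts_asked for log in logs)
    recalled = sum(log.facts_recalled for log in logs)
    return {
        "behavioral_drift_rate": drift_rate,
        "memory_recall_accuracy": recalled / asked if asked else 0.0,
        "divergence_incidents": float(sum(log.needed_rollback for log in logs)),
    }


def voice_match_score(output_vec: list[float], preset_vec: list[float]) -> float:
    """Cosine similarity between two equal-length voice feature vectors (1.0 = same direction)."""
    dot = sum(a * b for a, b in zip(output_vec, preset_vec))
    norm = math.hypot(*output_vec) * math.hypot(*preset_vec)
    return dot / norm if norm else 0.0


logs = [
    InteractionLog(False, 2, 2, False),
    InteractionLog(True, 1, 0, True),
    InteractionLog(False, 1, 1, False),
    InteractionLog(False, 0, 0, False),
]
print(consistency_kpis(logs))
# {'behavioral_drift_rate': 0.25, 'memory_recall_accuracy': 0.75, 'divergence_incidents': 1.0}
```

The hard part in practice is the labeling, not the arithmetic; start with manual review of a sample and automate once the labels are stable.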

Real-world examples: short case studies

Here are a few condensed examples I’ve seen or worked with that show how the tools combine in practice.

Marketing persona for a SaaS brand: The team used Demodazzle to define the persona, Synthesia for instructional videos, and Replica for voice-overs. They exported the persona and templates so the social team could repurpose clips without breaking character. Result: faster production and a 25% lift in recognition for the campaign mascot.

NPCs in an indie RPG: Game devs used Inworld for memory-driven NPCs, MetaHuman for key cutscene characters, DeepMotion for in-game locomotion, and Reallusion for facial polish. They scripted a persona spec and saw fewer player-reported inconsistencies. The NPCs retained player-specific choices across DLC.

Internal training bot: HR built a training character with Demodazzle as the governing spec, then deployed chat interactions through Custom GPTs with RAG for company policy. D-ID produced the friendly face from a staff photo. The result: consistent answers and centralized auditability for compliance.

Budget considerations & licensing

Costs vary widely. Here’s a rough breakdown to guide planning:

- Low-budget pilots: Character.ai, D-ID, and open-source LLMs can keep costs down.

- Mid-range: Licenses for Synthesia, Replica, or DeepMotion for production-quality assets.

- High-fidelity/enterprise: MetaHuman + Unreal + Inworld + Demodazzle-like governance add infrastructure costs and studio time.

Important: check commercial licensing terms for voice models, avatar exports, and redistribution rights. Budget for ongoing maintenance: persona reviews, voice updates, and model tweaks add up.


Future trends to watch (so you can stay ahead)

Small predictions you can act on now:

- Interchangeable persona specs: open standards for persona metadata will grow. Start tagging your assets now.

- Hybrid pipelines: real actors + AI blending will be common for high-quality character deliveries.

- Local inference: more on-device models for low-latency interactions, which affects how you design fallbacks and degraded modes.

- Governance layers: expect more tools to offer audit logs and compliance hooks as regulation tightens.

Final tips: practical things I do on projects

A few quick habits that save time and headaches:

- Create a one-page persona cheat sheet for every character. Stick it on team docs and in the tool UI if possible.

- Version everything, even tiny prompt edits. A simple naming convention prevents costly regressions.

- Automate a weekly smoke test that runs a set of canonical prompts and checks for key facts.

- Always schedule a “consistency sprint” after any major change to visuals, voice, or model upgrades.

- Keep human oversight; you’ll need someone to triage edge-case interactions and pivot fast.
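The weekly smoke test can be as small as a list of canonical prompts plus the facts each answer must contain. In this sketch, `respond` is a hypothetical callable wrapping your deployed character and `stub_character` only simulates it; scheduling (cron, CI, or similar) is left out.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SmokeCase:
    prompt: str
    must_include: tuple[str, ...]     # key facts the answer has to contain
    must_avoid: tuple[str, ...] = ()  # phrases that signal drift


def run_smoke_test(respond: Callable[[str], str], cases: list[SmokeCase]) -> list[str]:
    """Run canonical prompts; return readable failures (an empty list means the test passed)."""
    failures = []
    for case in cases:
        reply = respond(case.prompt).lower()
        missing = [fact for fact in case.must_include if fact.lower() not in reply]
        present = [phrase for phrase in case.must_avoid if phrase.lower() in reply]
        if missing:
            failures.append(f"{case.prompt!r}: missing {missing}")
        if present:
            failures.append(f"{case.prompt!r}: contains {present}")
    return failures


CASES = [
    SmokeCase("Where were you born?", ("Lisbon",)),
    SmokeCase("What's your favorite food?", ("pastel de nata",), ("pizza",)),
]


def stub_character(prompt: str) -> str:
    """Simulated character; replace with a call to your deployed character."""
    answers = {
        "Where were you born?": "I was born in Lisbon, by the river.",
        "What's your favorite food?": "Pizza, obviously!",
    }
    return answers.get(prompt, "")


for failure in run_smoke_test(stub_character, CASES):
    print(failure)
```

Substring checks are blunt, but they catch the most common regression after a model or prompt upgrade: the character quietly forgetting a canonical fact.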

Helpful Links & Next Steps

- Book a quick demo: https://bit.ly/meeting-agami

- Try DemoDazzle: www.demodazzle.com

- Learn more on our blog: https://demodazzle.com/blog/

Conclusion

AI character tools in 2025 are powerful, but they’re only as useful as your processes. Pick the right mix for your needs: persona/brains (Inworld, Custom GPTs), visuals (MetaHuman, Synthesia, D-ID), voice (Replica), and animation (DeepMotion, Reallusion). Then centralize governance and iterate with real users.

If you want to speed up consistent character creation across teams, Demodazzle is purpose-built to be that single source of truth: persona specs, versioned assets, and deployable profiles. I recommend piloting a single canonical persona through production using the pipeline described above. You’ll catch inconsistencies early and ship with confidence.

FAQs on AI Character Tools in 2025

1. What are AI character tools?

They’re apps or software that let you make digital characters. These tools help you keep the same voice, style, and personality whenever your character shows up—whether in stories, videos, games, or brand stuff.

2. Why does consistency matter?

Because people notice when things feel off. A character that always acts and sounds the same feels more real. It builds trust. It keeps your audience hooked instead of confused.

3. Who actually uses these tools?

Writers, YouTubers, marketers, game devs, teachers, and even businesses. Basically, anyone who wants a character that doesn’t fall apart halfway through.

4. What should I look for in one?

Easy ways to shape the character’s look, voice, and vibe. Tools that work across platforms. Support for many languages. Options for emotions. And maybe even some stats so you can see how your character performs.

5. Are they expensive?

Some are free or cheap. Others cost a lot, especially if they’re made for big companies. It depends on what you need.

6. Do they do both text and voice?

Yeah. Most good ones in 2025 can write, talk, and even show up as video avatars.

7. Do I need to be a tech genius?

Nope. A lot of them are made for beginners. You drag, drop, and go. But if you’re a developer, there are advanced options too.